✍🏻 Mustapha Limar

15 min read • Sun Aug 17, 2025

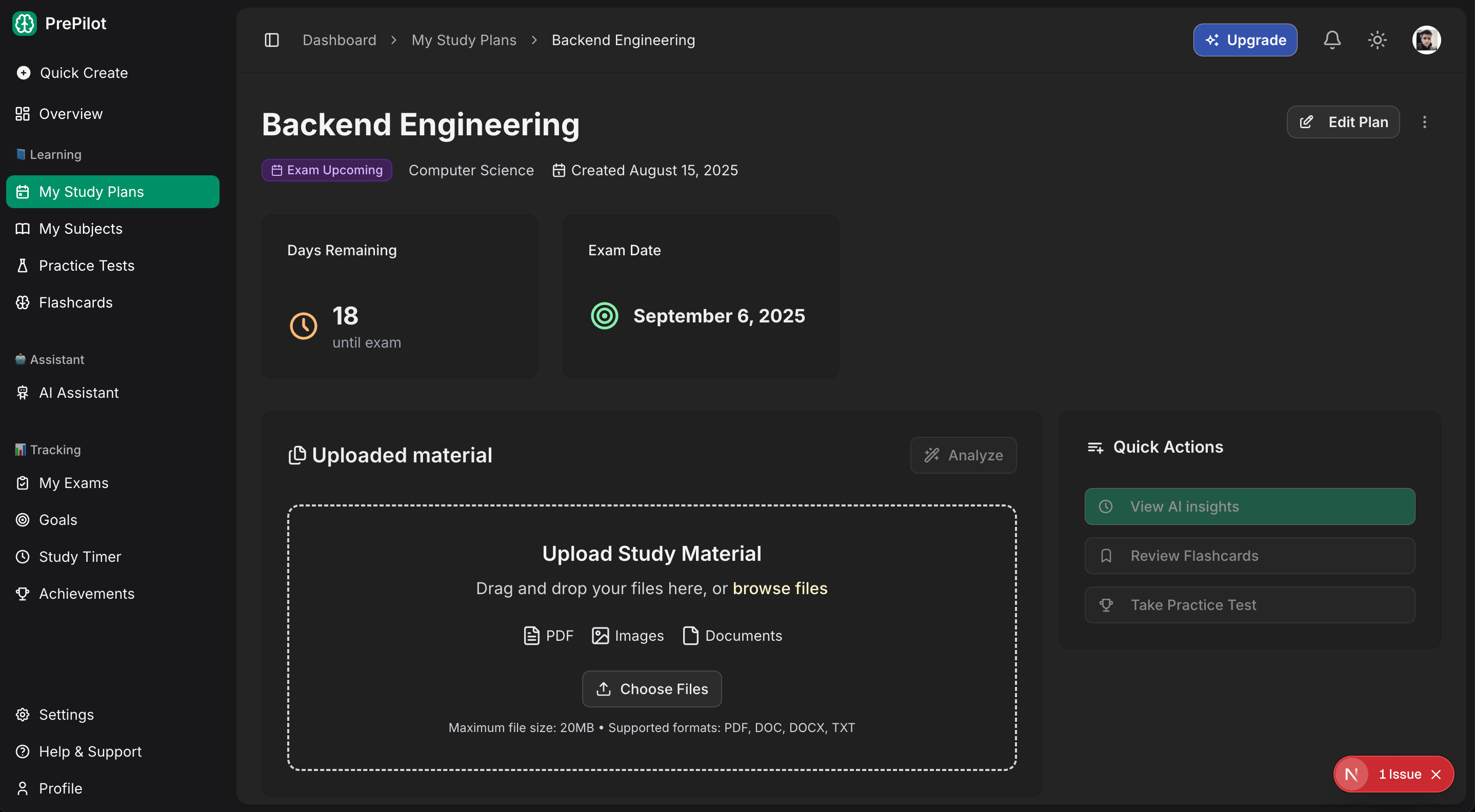

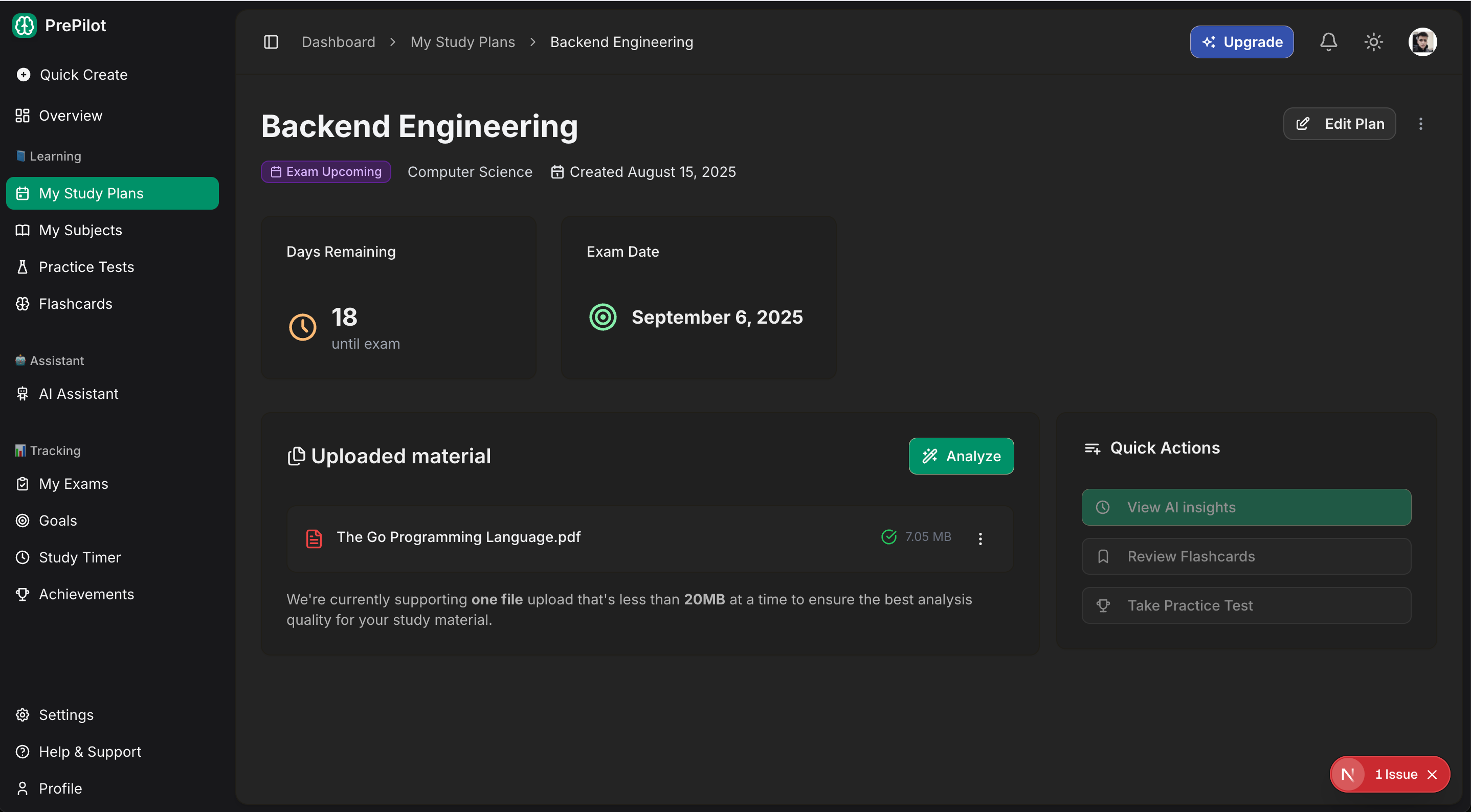

For the past couple of weeks, I have been working on a SaaS application that helps students prepare for their exams by providing a set of features powered by AI. One of the features I wanted to implement was the ability for students to upload their study materials, such as PDFs, images, and other documents, so that they could use them in conjunction with the AI-powered features. This turned out to be more complicated than I initially thought.

The Initial Approach: Simple Upload

When I first started implementing the file upload feature, I had a straightforward approach in mind:

- User selects a file

- Frontend sends it to the backend via HTTP

- Backend uploads to S3

- Backend creates database record

- Return success response

Simple, right? Well, not quite.

Problem #1: Context Cancellation and Timeouts

The first major issue I encountered was context cancellation errors. Here's what was happening:

```go
// My initial service method
func (s *StudyPlanService) UploadStudyPlanAttachment(ctx echo.Context, clerkID string, studyPlanID uuid.UUID, file *multipart.FileHeader) (*dto.StudyPlanAttachment, error) {
	// 1. Upload to S3 (takes 10-30 seconds for larger files).
	//    uploadCtx carries its own 10-minute timeout (setup omitted here).
	s3Key, err := s.awsClient.S3.UploadFile(uploadCtx, bucket, key, file)
	if err != nil {
		return nil, err
	}

	// 2. Create database record, using the *request* context,
	//    which may already be canceled once the upload finishes.
	attachment, err := s.studyPlanRepo.UploadStudyPlanAttachment(ctx.Request().Context(), studyPlanID, userID, s3Key, ...)
	if err != nil {
		return nil, err
	}

	return attachment, nil
}
```

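The problem? The database write used the HTTP request's context (`ctx.Request().Context()` from the Echo context), and that context could be canceled, for example when its timeout fired, while the handler was still waiting for the S3 upload to finish. The 10-minute timeout I set for the S3 upload didn't help, because it only governed the upload itself. By the time the database update ran, the request context had already been canceled, so the update failed with "context canceled" errors: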

```text
failed to update attachment download key error="failed to update attachment download_key: context canceled"
```

Problem #2: Misleading User Experience

Even when the upload "succeeded," users were getting confusing feedback. The frontend would show:

- Progress bar reaches 100% ✅

- "Upload successful!" toast ✅

- File appears in the list ✅

But then users would try to download the file and get errors because the S3 upload was still in progress in the background. The progress bar only reflected the HTTP request completion, not the actual file upload to storage.

Problem #3: Race Conditions and Duplicates

When I tried to fix the context issue by making the S3 upload asynchronous, I introduced new problems:

- Database record created immediately (empty downloadKey)

- S3 upload happens in background

- Frontend shows "success" but file isn't actually ready

- Users see duplicate entries appearing/disappearing

The Solution: Direct Frontend-to-S3 Upload

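After several iterations, and after learning from the asynchronous approach, I implemented a much cleaner solution: direct frontend-to-S3 uploads using presigned URLs. A presigned URL is a short-lived URL, signed with the server's AWS credentials, that lets whoever holds it perform one specific operation (here, a `PUT` of one object key) without having credentials of their own. The browser sends the file bytes straight to S3 and the backend only stores metadata, so the long-running upload never sits inside a backend request. This eliminates the context cancellation issues and provides a much better user experience.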
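The post doesn't show the `/api/study-materials` handler that issues the presigned URL. The following is a rough sketch of what such an endpoint might do: validate the request, build an S3 key the client doesn't control, and sign a `PUT` for it. The size limit, allowed types, key layout, and `publicBaseUrl` are illustrative assumptions, and `createPresignedUpload`, `buildFileKey`, and `validatePresignRequest` are hypothetical names, not the author's code. The signer is injected as a function; with the AWS SDK for JavaScript v3 it could wrap `getSignedUrl(s3Client, new PutObjectCommand({ Bucket, Key, ContentType }), { expiresIn })` from `@aws-sdk/s3-request-presigner`.

```typescript
// Illustrative limits; the post does not state the real ones.
const MAX_FILE_SIZE = 25 * 1024 * 1024; // 25 MB
const ALLOWED_TYPES = new Set(["application/pdf", "image/png", "image/jpeg"]);

interface PresignRequest {
  fileName: string;
  fileSize: number;
  fileType: string;
}

// Same shape the frontend destructures: `const { data: presignedData } = ...`
interface PresignResponse {
  data: { presignedUrl: string; fileKey: string; fileUrl: string };
}

// Signs a PUT for `key`. In a real route this would wrap the AWS SDK presigner.
type SignPutUrl = (key: string, contentType: string) => Promise<string>;

// Returns an error message, or null if the request is acceptable.
function validatePresignRequest(req: PresignRequest): string | null {
  if (!req.fileName) return "fileName is required";
  if (!Number.isFinite(req.fileSize) || req.fileSize <= 0) return "fileSize must be a positive number";
  if (req.fileSize > MAX_FILE_SIZE) return "file is too large";
  if (!ALLOWED_TYPES.has(req.fileType)) return `unsupported file type: ${req.fileType}`;
  return null;
}

// The server chooses the key; the client-supplied name is only a sanitized suffix.
function buildFileKey(userId: string, uploadId: string, fileName: string): string {
  const safeName = fileName.replace(/[^A-Za-z0-9._-]/g, "_");
  return `study-materials/${userId}/${uploadId}-${safeName}`;
}

async function createPresignedUpload(
  req: PresignRequest,
  userId: string,
  uploadId: string, // e.g. crypto.randomUUID() in a real handler
  sign: SignPutUrl,
  publicBaseUrl: string,
): Promise<PresignResponse> {
  const error = validatePresignRequest(req);
  if (error !== null) throw new Error(error);

  const fileKey = buildFileKey(userId, uploadId, req.fileName);
  // If ContentType is part of the signature, the browser's PUT must send the same header.
  const presignedUrl = await sign(fileKey, req.fileType);
  return { data: { presignedUrl, fileKey, fileUrl: `${publicBaseUrl}/${fileKey}` } };
}
```

Because the key is built server-side under a per-user prefix, the Go backend could later check that a submitted `fileKey` starts with that user's prefix before creating the attachment record.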

How It Works

- Frontend Requests Presigned URL

```typescript
// Step 1: Get presigned URL for direct S3 upload
const presignedResponse = await fetch("/api/study-materials", {
  method: "POST",
  headers: { "Content-Type": "application/json" },
  body: JSON.stringify({
    fileName: file.name,
    fileSize: file.size,
    fileType: file.type,
  }),
});

const { data: presignedData } = await presignedResponse.json();
```

- Direct S3 Upload from Frontend

```typescript
// Step 2: Upload directly to S3 using presigned URL
const uploadResponse = await fetch(presignedData.presignedUrl, {
  method: "PUT",
  body: file,
  headers: {
    "Content-Type": file.type,
  },
});
```

- Create Database Record

```typescript
// Step 3: Create database record with S3 metadata
const attachmentData = {
  fileName: file.name,
  fileSize: file.size,
  fileType: file.type,
  fileKey: presignedData.fileKey,
  fileUrl: presignedData.fileUrl,
};

const response = await apiClient.post(
  `/v1/study-plans/${planId}/attachments`,
  attachmentData,
  {
    headers: { "Content-Type": "application/json" },
  }
);
```

Backend Changes

The backend now only handles database operations, making it much simpler and more reliable:

```go
// Simplified backend service - no more S3 upload handling
func (s *StudyPlanService) UploadStudyPlanAttachment(ctx echo.Context, clerkID string, studyPlanID uuid.UUID, payload *dto.CreateAttachmentPayload) (*dto.StudyPlanAttachment, error) {
	user, err := s.userRepo.GetUserByClerkID(clerkID)
	if err != nil {
		return nil, fmt.Errorf("user not found: %w", err)
	}

	// Verify study_plan exists and belongs to user
	_, err = s.studyPlanRepo.CheckStudyPlanExists(ctx.Request().Context(), user.ID, studyPlanID)
	if err != nil {
		return nil, err
	}

	// Create attachment record with S3 metadata from frontend
	attachment, err := s.studyPlanRepo.CreateStudyPlanAttachment(
		ctx.Request().Context(),
		studyPlanID,
		user.ID,
		payload.FileName,
		payload.FileSize,
		payload.FileType,
		payload.FileKey, // S3 key from frontend upload
	)
	if err != nil {
		return nil, fmt.Errorf("failed to create attachment: %w", err)
	}

	return attachment, nil
}
```

Frontend Progress Tracking

The new approach provides accurate progress tracking:

```typescript
const uploadMutation = useMutation({
  mutationFn: ({
    file,
    progress,
  }: {
    file: File;
    progress: (p: number) => void;
  }) => uploadAttachment(planId, file, progress),

  onSuccess: (response, variables) => {
    const { file } = variables;
    const attachment = response.data;

    if (attachment) {
      // Since S3 upload is already complete and DB record is created,
      // we can immediately mark as completed
      setUploadedFiles((prev) =>
        prev.map((f) =>
          f.name === file.name
            ? {
                ...f,
                uploadPhase: "completed",
                uploadProgress: 100,
                attachmentId: attachment.id,
                isUploading: false,
                isCompleted: true,
              }
            : f
        )
      );

      toast.success("File uploaded successfully!");

      if (onUploadSuccess && attachment) {
        onUploadSuccess(attachment);
        // Clear the temporary file immediately since the attachment is now in the main list
        setUploadedFiles([]);
      }
    }
  },
});
```

Progress Phases

```typescript
const getUploadStatusText = (file: UploadedFile) => {
  switch (file.uploadPhase) {
    case "s3_uploading":
      return `Uploading to storage... ${file.uploadProgress}%`;
    case "creating_record":
      return "Creating record...";
    case "completed":
      return "Upload complete!";
    case "failed":
      return "Upload failed";
    default:
      return `Uploading... ${file.uploadProgress}%`;
  }
};
```

Key Benefits of the New Approach

- No More Context Cancellation
  - The backend no longer uploads to S3, so no request context can be canceled mid-upload
  - Frontend handles upload directly to S3
  - Backend only does database operations (fast and reliable)
- Accurate Progress Tracking
  - Progress reflects the actual upload to S3, not just the request to the backend
  - Clear phase indicators (uploading → creating record → complete)
  - No misleading "success" messages
- Better Performance
  - File bytes go straight to S3 instead of through the backend, saving backend bandwidth
  - Reduced server load: the backend never handles file data
  - Faster backend responses, since requests carry only metadata
- Improved User Experience
  - Immediate feedback when upload completes
  - No duplicate entries or flickering
  - Clear status indicators with animated icons
- Simplified Architecture
  - Separation of concerns: the frontend handles files, the backend handles data
  - Easier to debug: a clear flow from frontend to S3 to backend
  - More scalable: the backend doesn't need to handle large file uploads
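One caveat on progress: the upload code in this post uses `fetch` for the S3 `PUT`, and `fetch` does not report upload progress, so the bar can only jump between fixed values (10 before the `PUT`, 80 after it). For byte-level progress, one option is `XMLHttpRequest`, whose `upload.onprogress` event reports `loaded` and `total` bytes. Below is a minimal sketch, assuming the same 10-to-80 band for the S3 step; `putWithProgress` and the `UploadXhr` interface are hypothetical names introduced here, and the interface lists only the XHR members the function uses so a fake can stand in during tests.

```typescript
// The subset of XMLHttpRequest used below, so tests can pass a fake.
interface UploadXhr {
  upload: {
    onprogress: ((e: { lengthComputable: boolean; loaded: number; total: number }) => void) | null;
  };
  onload: (() => void) | null;
  onerror: (() => void) | null;
  status: number;
  open(method: string, url: string): void;
  setRequestHeader(name: string, value: string): void;
  send(body: Blob): void;
}

function putWithProgress(
  url: string,
  file: Blob,
  contentType: string,
  onProgress: (p: number) => void,
  createXhr: () => UploadXhr = () => new XMLHttpRequest() as unknown as UploadXhr,
): Promise<void> {
  return new Promise((resolve, reject) => {
    const xhr = createXhr();
    xhr.open("PUT", url);
    // Must match the Content-Type the URL was signed with, if any.
    xhr.setRequestHeader("Content-Type", contentType);

    xhr.upload.onprogress = (e) => {
      if (e.lengthComputable && e.total > 0) {
        // Map byte progress into the 10..80 band used by uploadAttachment.
        onProgress(10 + Math.round((e.loaded / e.total) * 70));
      }
    };
    // onload fires for any HTTP status, so S3 errors (e.g. 403) are checked here.
    xhr.onload = () => {
      if (xhr.status >= 200 && xhr.status < 300) resolve();
      else reject(new Error(`S3 upload failed with status ${xhr.status}`));
    };
    xhr.onerror = () => reject(new Error("Network error during S3 upload"));

    xhr.send(file);
  });
}
```

In `uploadAttachment`, the `fetch` call in Step 2 could then be swapped for `await putWithProgress(presignedData.presignedUrl, file, file.type, onProgress)`.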

The Final Implementation

The complete upload flow now works like this:

- User selects file → Frontend validates file type and size

- Request presigned URL → Backend generates S3 upload URL

- Upload to S3 → Frontend uploads directly to S3 with progress tracking

- Create database record → Backend creates attachment record with S3 metadata

- Update UI → Frontend shows completion and adds file to list
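The flow above reports progress as plain numbers, while `getUploadStatusText` switches on an `uploadPhase` field. One way to connect the two is a small helper that turns a progress number into the `uploadPhase`/`uploadProgress` pair, assuming the phase boundaries follow the values `uploadAttachment` reports (S3 upload up to 80, record creation at 90, done at 100). `toProgressState` is a hypothetical name; the `"failed"` phase would still be set from the mutation's error path.

```typescript
// Phase names match getUploadStatusText; the thresholds are an assumption
// based on the onProgress values in uploadAttachment (5/10 → 80 → 90 → 100).
type UploadPhase = "s3_uploading" | "creating_record" | "completed" | "failed";

interface ProgressState {
  uploadPhase: UploadPhase;
  uploadProgress: number;
}

function toProgressState(progress: number): ProgressState {
  // Clamp to 0..100 and round so the UI never shows "103%" or fractional percentages.
  const uploadProgress = Math.min(100, Math.max(0, Math.round(progress)));
  let uploadPhase: UploadPhase;
  if (uploadProgress >= 100) {
    uploadPhase = "completed";
  } else if (uploadProgress >= 90) {
    uploadPhase = "creating_record";
  } else {
    // Also covers the presigned-URL request (5 to 10), which has no phase of its own.
    uploadPhase = "s3_uploading";
  }
  return { uploadPhase, uploadProgress };
}
```

The mutation's `progress` callback could then spread `toProgressState(p)` into the matching entry of `uploadedFiles`.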

```typescript
// Complete upload function with progress tracking
export async function uploadAttachment(
  planId: string,
  file: File,
  onProgress: (p: number) => void
) {
  // Step 1: Get presigned URL for direct S3 upload
  onProgress(5);
  const presignedResponse = await fetch("/api/study-materials", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      fileName: file.name,
      fileSize: file.size,
      fileType: file.type,
    }),
  });
  if (!presignedResponse.ok) {
    throw new Error(`Failed to get presigned URL (HTTP ${presignedResponse.status})`);
  }
  const { data: presignedData } = await presignedResponse.json();
  onProgress(10);

  // Step 2: Upload directly to S3 using presigned URL
  const uploadResponse = await fetch(presignedData.presignedUrl, {
    method: "PUT",
    body: file,
    headers: { "Content-Type": file.type },
  });
  // fetch only rejects on network errors; an expired or invalid URL
  // comes back as a non-2xx status (e.g. 403), so check it explicitly.
  if (!uploadResponse.ok) {
    throw new Error(`S3 upload failed (HTTP ${uploadResponse.status})`);
  }
  onProgress(80); // S3 upload complete

  // Step 3: Create database record with S3 metadata
  const attachmentData = {
    fileName: file.name,
    fileSize: file.size,
    fileType: file.type,
    fileKey: presignedData.fileKey,
    fileUrl: presignedData.fileUrl,
  };

  onProgress(90); // Creating database record
  const response = await apiClient.post(
    `/v1/study-plans/${planId}/attachments`,
    attachmentData,
    { headers: { "Content-Type": "application/json" } }
  );

  onProgress(100); // Complete
  return response;
}
```

Key Lessons Learned

- Direct Uploads Are Better
  - Presigned URLs eliminate backend file handling
  - Better performance and user experience
  - Simpler architecture with clear separation of concerns
- Progress Tracking Matters
  - Accurate progress builds user trust
  - Phase-based feedback provides clear status updates
  - Visual indicators (animations, icons) enhance UX
- Architecture Decisions Impact Everything
  - The initial backend-upload approach caused cascading issues
  - Direct frontend upload solved multiple problems at once
  - Sometimes the "obvious" solution isn't the best one
- User Experience Trumps Technical Simplicity
  - Immediate feedback is crucial for file uploads
  - Clear status messages prevent user confusion
  - Smooth transitions between upload phases

Conclusion

What started as a "simple file upload feature" turned into a complex journey through different architectural approaches. The final solution using direct frontend-to-S3 uploads with presigned URLs is not only more reliable but also provides a much better user experience.

The key insight was that trying to handle file uploads through the backend introduced unnecessary complexity and performance issues. By leveraging S3's presigned URL feature, we eliminated context cancellation problems, improved performance, and created a more maintainable architecture.

For anyone implementing similar features, I'd recommend starting with the direct upload approach from the beginning. While it might seem more complex initially, it actually simplifies the overall architecture and provides a much better user experience. The presigned URL approach is a well-established pattern that scales well and eliminates many of the edge cases that arise when handling file uploads through backend servers.

The journey taught me that sometimes the best solution isn't the most obvious one, and that user experience considerations should drive architectural decisions, especially for features like file uploads where users need clear, accurate feedback about what's happening with their files.